Lately, Groq has been garnering significant attention from our clients for its inference technology. Groq has raised a total of $367 million across multiple funding rounds, with the most recent Series C round bringing in $300 million. Its valuation is approximately $2.5 billion.

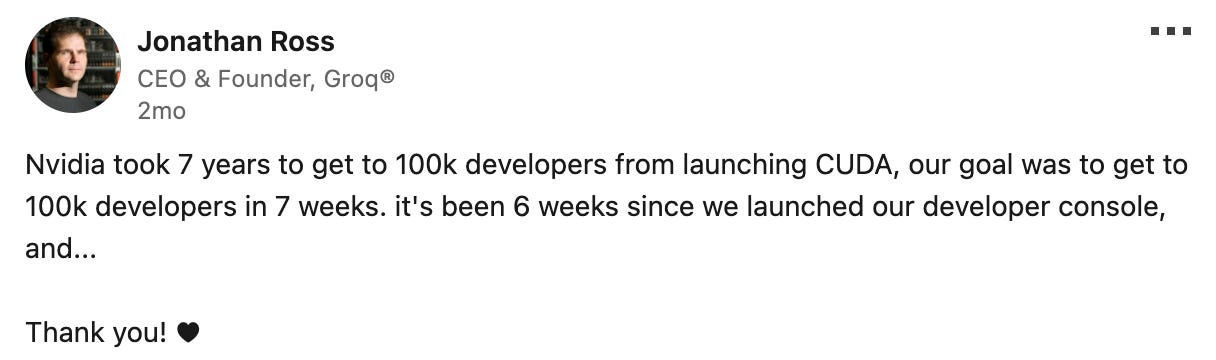

Groq has 70,000 new developers using its Playground and more than 19,000 new applications running via the Groq API. The company, founded by ex-Google engineers, has been making waves in the AI hardware industry, growing at a pace that rivals and even surpasses NVIDIA's early growth trajectory.

Groq's inference technology delivers remarkable performance, serving LLaMA 3 at over 800 tokens per second. That is roughly 11 times faster than OpenAI's GPT-4, which tops out at approximately 70 tokens per second.

In this article, we will review:

What is the Outlook for the AI Chip Industry?

Tokens per Second and Why does it Matter in Inference?

True Differentiator: Groq’s Unique SRAM Memory (unlike NVIDIA’s HBM)

Can NVIDIA's Monopoly Be Challenged?

Please note: The insights presented in this article are derived from confidential consultations our team has conducted with clients across private equity, hedge funds, startups, and investment banks, facilitated through specialized expert networks. Due to our agreements with these networks, we cannot reveal specific names from these discussions. Therefore, we offer a summarized version of these insights, ensuring valuable content while upholding our confidentiality commitments.

What is the Outlook for the AI Chip Industry?

Per Gartner, the total addressable market (TAM) for AI chips is projected to reach $119.4 billion by 2027. Currently, approximately 40% of AI chips are used for inference, which puts the TAM for inference-specific chips at around $48 billion by 2027 (40% of $119.4 billion).
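The arithmetic behind the $48 billion figure can be sketched in a few lines. This is only a back-of-the-envelope illustration: the function name `inference_tam` and the alternative shares in the loop are our own hypothetical choices for a sensitivity check, not Gartner projections.

```python
# Figures quoted in this article: Gartner's 2027 projection and today's inference share.
TOTAL_AI_CHIP_TAM_2027_BN = 119.4  # USD billions
INFERENCE_SHARE_TODAY = 0.40       # ~40% of AI chips are used for inference


def inference_tam(total_tam_bn: float, inference_share: float) -> float:
    """Return the inference-specific TAM in USD billions."""
    if not 0.0 <= inference_share <= 1.0:
        raise ValueError("inference_share must be between 0 and 1")
    return total_tam_bn * inference_share


baseline = inference_tam(TOTAL_AI_CHIP_TAM_2027_BN, INFERENCE_SHARE_TODAY)
print(f"Inference TAM at 40% share: ${baseline:.1f}B")

# Hypothetical shares, for sensitivity only (assumptions, not forecasts).
for share in (0.50, 0.60):
    tam = inference_tam(TOTAL_AI_CHIP_TAM_2027_BN, share)
    print(f"Inference TAM at {share:.0%} share: ${tam:.1f}B")
```

At the current 40% share this gives about $47.8 billion, which rounds to the $48 billion cited above. Every additional 10 points of inference share adds roughly $12 billion to the inference-specific TAM.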

As AI applications mature, this 40% inference share will change to,